Ordinary Least Squares: Part 2

Let’s arrive at the Normal Equations

Things to note about the OLS estimator

- OLS estimators are point estimators, i.e. they give a single value for each beta rather than a range of values.

- OLS estimators are BLUE (Best Linear Unbiased Estimators): under the Gauss-Markov assumptions, they are linear in y, unbiased, and have the smallest variance among all linear unbiased estimators.

- Under the assumption of normally distributed errors, the OLS estimator is identical to the Maximum Likelihood estimator, so it is also asymptotically efficient and attains the Cramér-Rao lower bound.
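A quick Monte Carlo sketch can make the "point estimator" and "unbiased" properties concrete. It assumes a made-up true intercept of 2.0 and slope of -1.5, a fixed design, and normal errors with standard deviation 1. Each fit returns one number per beta, and averaging those numbers over many simulated samples lands close to the true values. It only illustrates unbiasedness, not the minimum-variance part of BLUE.

```python
import numpy as np

# Hypothetical setup: a known "true" beta (intercept, slope) that we try to recover.
rng = np.random.default_rng(0)
true_beta = np.array([2.0, -1.5])
n_obs, n_reps = 50, 2000

# Fixed design matrix: a column of ones (intercept) plus one explanatory variable.
x = rng.uniform(0, 10, size=n_obs)
X = np.column_stack([np.ones(n_obs), x])

estimates = np.empty((n_reps, 2))
for r in range(n_reps):
    # Fresh normal errors with constant variance in every replication.
    y = X @ true_beta + rng.normal(0.0, 1.0, size=n_obs)
    # Each fit gives a single value per beta: a point estimate.
    estimates[r] = np.linalg.lstsq(X, y, rcond=None)[0]

# Averaging the point estimates over replications approximates their expectation.
mean_estimate = estimates.mean(axis=0)
print("true beta:        ", true_beta)
print("mean OLS estimate:", mean_estimate)
```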

The derivation of the coefficients (parameters) starts from:

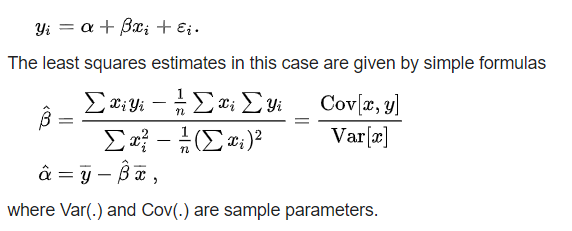

Continuing from our previous post on the OLS estimator, the question now is how to determine the parameters of the linear regression model.

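If we had only one explanatory variable, we could simply differentiate the loss function (the sum of squared residuals) with respect to the intercept and slope and set the derivatives to zero. With several variables, the loss function is written in terms of the coefficient vector b, where X is the matrix whose rows are the $x_i^T$:

$$S(b) = \sum_{i=1}^{n} \left(y_i - x_i^T b\right)^2 = (y - Xb)^T (y - Xb)$$

and we use the normal equations to solve for the whole vector of betas at once.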
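For the one-variable case mentioned above, setting the two partial derivatives of $S(b_0, b_1) = \sum_i (y_i - b_0 - b_1 x_i)^2$ to zero gives the familiar closed-form formulas. The sketch below implements them (the helper name `simple_ols` and the data are made up for illustration) and cross-checks against NumPy's `np.polyfit`, which fits the same least-squares line.

```python
import numpy as np

def simple_ols(x, y):
    """Closed-form OLS for one explanatory variable plus an intercept.

    dS/db0 = 0 gives b0 = y_bar - b1 * x_bar, and
    dS/db1 = 0 gives b1 = sum((x - x_bar)(y - y_bar)) / sum((x - x_bar)^2).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    b1 = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
    b0 = y_bar - b1 * x_bar
    return b0, b1

# Illustrative data, made up for this example.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

b0, b1 = simple_ols(x, y)
# np.polyfit returns coefficients highest power first: [slope, intercept].
slope, intercept = np.polyfit(x, y, 1)
print(b0, b1)
print(intercept, slope)
```

Here the slope works out to 19.9 / 10 = 1.99 and the intercept to 6.02 - 1.99 * 3 = 0.05.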

Things to note here:

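- Each $x_i$ vector includes a constant 1 as one of its variables, so that the corresponding beta acts as the intercept of the regression equation.

- S(b) is simply the sum of squared residuals: $y_i$ is the actually recorded response and $x_i^T b$ is the predicted response for the i-th observation.

- In matrix form, a sum of squares is written as an inner product: for a vector a, the sum of its squared entries is $a^T a$, so the squared residuals of all observations together are $(y - Xb)^T (y - Xb)$, where X stacks the $x_i^T$ as rows.

- The idea remains the same: we differentiate this loss function with respect to the coefficient vector b and set the derivative to zero to minimize the total squared error.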
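Written out, the differentiation step looks like this. It is a sketch that takes X as the n × p matrix whose rows are $x_i^T$ (including the column of ones) and y as the vector of responses, and uses the fact that $y^T X b$ is a scalar, so it equals $b^T X^T y$:

$$
\begin{aligned}
S(b) &= (y - Xb)^T (y - Xb) = y^T y - 2\, b^T X^T y + b^T X^T X\, b \\
\frac{\partial S}{\partial b} &= -2\, X^T y + 2\, X^T X\, b = 0 \\
&\Longrightarrow\; X^T X\, b = X^T y
\end{aligned}
$$

These p equations in p unknowns are the normal equations.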

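The result of the optimization is

$$\hat{\beta} = \arg\min_{b} S(b) = \left(X^T X\right)^{-1} X^T y$$

where argmin denotes the value of b that gives the minimum value of S(b). This closed form requires $X^T X$ to be invertible, i.e. the columns of X must be linearly independent.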
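A minimal NumPy sketch of solving the normal equations, on synthetic data whose coefficients (1.0, 2.0, -0.5) are made up for illustration. The hypothetical helper `ols_normal_equations` solves $X^T X b = X^T y$ with `np.linalg.solve` instead of forming the inverse explicitly, and the result is cross-checked against `np.linalg.lstsq`, which does not go through the normal equations.

```python
import numpy as np

def ols_normal_equations(X, y):
    """Solve X^T X b = X^T y for b.

    Uses np.linalg.solve rather than inverting X^T X, which is cheaper and
    numerically more stable. Assumes the columns of X are linearly independent.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(42)
n = 100
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
# Column of ones first, so beta_hat[0] is the intercept.
X = np.column_stack([np.ones(n), x1, x2])
y = 1.0 + 2.0 * x1 - 0.5 * x2 + rng.normal(scale=0.3, size=n)

beta_hat = ols_normal_equations(X, y)
# Cross-check with NumPy's SVD-based least-squares solver.
beta_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]
print(beta_hat)
print(beta_lstsq)

# At the minimum, the gradient -2 X^T (y - X b) should be (numerically) zero.
residuals = y - X @ beta_hat
print(X.T @ residuals)
```

For ill-conditioned X, solving via `lstsq` (or a QR decomposition) is usually preferred, because forming $X^T X$ squares the condition number.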

Another point that is easy to miss is that the constant variance of the error term, $\sigma^2$, is also a parameter and therefore needs to be estimated too.

To see why this is the case, please go through this, which gives a nice explanation.
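One common way to estimate $\sigma^2$ is sketched below (the helper name and the simulated data, with a true $\sigma = 0.5$, are assumptions for illustration): divide the residual sum of squares by $n - p$, where p counts the columns of X including the column of ones. This gives an unbiased estimate; the maximum likelihood estimate under normal errors divides by n instead.

```python
import numpy as np

def estimate_error_variance(X, y, beta_hat):
    """Unbiased estimate of the error variance: s^2 = RSS / (n - p).

    n is the number of observations and p the number of columns of X
    (including the column of ones).
    """
    n, p = X.shape
    residuals = y - X @ beta_hat
    rss = residuals @ residuals  # sum of squared residuals, r^T r
    return rss / (n - p)

rng = np.random.default_rng(7)
n, sigma = 5000, 0.5  # hypothetical sample size and true error std. deviation
x = rng.uniform(-3, 3, size=n)
X = np.column_stack([np.ones(n), x])
y = 0.5 + 1.2 * x + rng.normal(scale=sigma, size=n)

beta_hat = np.linalg.lstsq(X, y, rcond=None)[0]
s2 = estimate_error_variance(X, y, beta_hat)
print("true sigma^2: ", sigma ** 2)
print("estimated s^2:", s2)
```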

To keep things simple, I have collected just the results rather than laying out the exact mathematical steps.

Drop me a message if the exact formulation would help, and I will try to put it up here.

Resources:

https://en.wikipedia.org/wiki/Ordinary_least_squares#Matrix/vector_formulation